Exploring Trends in AI and Machine Learning

Artificial intelligence (AI) spans a broad array of techniques and applications aimed at creating systems that can learn, reason, and, in some cases, generate creative outputs. From chatbots and digital assistants to generative AI tools for creating art, music, and video, AI technology is constantly expanding its reach. While data analytics is one use of AI, this thread will cover a wide range of intelligent applications and advancements. Here, I’ll be providing updates on cutting-edge trends in AI, exploring its impact across different fields, and keeping you informed about the latest breakthroughs in the industry.

11/1/2024 · 182 min read

Claude Cowork triggers global sell-off in software shares

Feb 4, 2026

In February 2026, the global technology market was rocked by a historic sell-off, widely labeled the "SaaSpocalypse," that wiped out approximately $285 billion in market capitalization in a single trading session. This financial earthquake was triggered by Anthropic’s agentic tools: Claude Code and its non-technical counterpart, Claude Cowork. Claude Code is an agentic command-line tool that allows developers to delegate complex coding, testing, and debugging tasks directly from their terminal, while Claude Cowork brings these same autonomous capabilities to the desktop environment for non-coders. These tools are distinct from traditional chatbots because they possess "agency": they can autonomously plan multi-step workflows, manage local file systems, and use specialized plugins to execute high-value tasks across legal, financial, and sales departments without constant human guidance.

The panic among investors stems from a fundamental shift in the AI narrative: AI is no longer viewed merely as a "copilot" that enhances human productivity, but as a direct substitute for enterprise software and professional services. The release of sector-specific plugins—particularly for legal and financial workflows—caused a sharp decline in stocks like Thomson Reuters (-18%) and Salesforce, as markets feared these autonomous agents would render expensive, "per-seat" software subscriptions obsolete. Investors are increasingly worried that businesses will stop buying specialized SaaS tools if a single AI agent can perform those functions across an operating system, leading to a "get-me-out" style of aggressive selling as the industry's traditional revenue models face an existential threat.

In response to the "SaaSpocalypse" and the rise of autonomous agents like Claude Code, Microsoft and Google are fundamentally restructuring how they charge for software. They are moving away from the decades-old "per-user" model and toward a future where AI agents—not just humans—are the primary billable units. Google is countering the threat by positioning AI as a high-efficiency utility, focusing on aggressive price-performance.

That’s my take on it:

The emergence of autonomous agents like Claude Cowork and Claude Code represents a classic instance of creative destruction. While the "SaaSpocalypse" of early 2026—which saw a massive selloff in traditional software stocks—reflects a period of painful market recalibration, it signals the birth of a more efficient technological era. In the short term, the established order is being disrupted; entry-level programmers and those tethered to legacy SaaS "per-seat" models are facing significant professional friction as AI begins to automate routine coding and administrative workflows. However, this displacement is the precursor to a long-term benefit: the commoditization of software creation. As the cost of building and maintaining code drops toward zero, we will see an explosion of innovation, making high-powered technology accessible to every sector of society at a fraction of its former cost.

I think the trend of agentic, AI-powered coding is both inevitable and irreversible. The writing is on the wall! For professionals and students alike, survival depends on following this trend rather than ignoring or resisting it. Consequently, the burden of adaptation falls heavily on educational institutions. Educators must immediately revamp their curricula to move beyond rote programming exercises and instead equip students with the AI literacy required to guide, audit, and integrate agentic tools. By teaching students to treat AI as a high-level collaborator, we ensure that the next generation of workers is prepared to thrive in an evolving landscape where human ingenuity is amplified, rather than replaced, by machine autonomy.

Merger of SpaceX and xAI may turn science fiction into scientific fact

Feb 3, 2026

On February 2, 2026, SpaceX officially announced its acquisition of xAI, a milestone merger that values the combined entity at approximately $1.25 trillion. This deal consolidates Elon Musk’s aerospace and artificial intelligence ventures, including the social media platform X (which was acquired by xAI in early 2025), into a single "vertically integrated innovation engine." The primary strategic driver for the merger is the development of orbital data centers. By leveraging SpaceX's launch capabilities and Starlink's satellite network, Musk aims to bypass the terrestrial energy and cooling constraints of AI by deploying a constellation of up to one million solar-powered satellites. Musk stated that this move is the first step toward becoming a "Kardashev II-level civilization" capable of harnessing the sun's full power to sustain humanity’s multi-planetary future. Musk predicts that space-based processing will become the most cost-effective solution for AI within the next three years.

Financial analysts view the acquisition as a critical precursor to a highly anticipated SpaceX initial public offering (IPO) expected in early summer 2026. The merger combines SpaceX’s profitable launch business—which generated an estimated $8 billion in profit in 2025—with the high-growth, compute-intensive focus of xAI. While the terms of the deal were not fully disclosed, it was confirmed that xAI shares will be converted into SpaceX stock. This consolidation also clarifies the landscape for Tesla investors, as Tesla’s recent $2 billion investment in xAI now translates into an indirect stake in the newly formed space-AI giant.

That’s my take on it:

Despite the ambitious vision, the technology for massive orbital computing remains largely untested. In a terrestrial data center, servers are often refreshed every 18 to 36 months to keep up with AI chip advancements. In space, if you cannot upgrade, your billion-dollar hardware becomes a "stranded asset"—obsolete before it even pays for itself. To avoid the "Obsolescence Trap," the industry is shifting away from static satellites toward modular, "plug-and-play" architectures. The primary solution lies in Robotic Servicing Vehicles (RSVs), which act as autonomous space-technicians capable of performing high-precision house calls.

Elon Musk’s track record suggests that betting against his vision is often a losing proposition. While critics have frequently dismissed his technological ambitions for Tesla and SpaceX as unachievable science fiction, he has consistently defied expectations by delivering on milestones that were once deemed impossible. A prime example is the development of reusable rockets, such as the Falcon 9 and Falcon Heavy, which transformed spaceflight from a government-funded luxury into a commercially viable enterprise by drastically reducing launch costs. Similarly, his push for Full Self-Driving (FSD) technology and the massive success of the Model 3, which proved that electric vehicles could be both high-performance and mass-marketable, fundamentally disrupted the global automotive industry. Other breakthroughs, like the rapid deployment of the Starlink satellite constellation providing global high-speed internet and the Neuralink brain-computer interface reaching human trial stages, further demonstrate his ability to bridge the gap between radical theory and functional reality.

The acquisition and merger of SpaceX and xAI represent another "moonshot" that requires the same level of audacity. We need visionary risk-takers to advance our civilization, as they are the ones who push the boundaries of what is possible. By daring to move AI infrastructure into orbit, Musk is attempting to solve the energy and cooling crises of terrestrial computing in one bold stroke. I sincerely hope that he is right once again; it is this willingness to embrace extreme risk that turns the science fiction of today into the scientific facts of tomorrow.

OpenClaw and the rise of fully autonomous personal AI

OpenClaw (formerly known as Clawdbot and Moltbot) is a viral, open-source AI assistant that has quickly become a sensation in the tech world for its ability to act as a "proactive" agent rather than a passive chatbot. Developed by Austrian engineer Peter Steinberger, the app distinguishes itself by living inside the messaging platforms you already use, such as WhatsApp, Telegram, iMessage, and Slack. Unlike traditional AI tools that merely generate text, OpenClaw is designed to "actually do things" by connecting directly to your local machine or a server. It can manage calendars, triage emails, execute terminal commands, and even perform web automation, all while maintaining a persistent memory that allows it to remember preferences and context across different conversations and weeks of interaction.

The app's rapid rise in early 2026 was accompanied by a high-profile rebranding saga. It was originally launched under the name Clawdbot (with its AI persona named Clawd), a clever play on Anthropic's "Claude" models. However, following a trademark dispute in which Anthropic requested a name change to avoid brand confusion, the project was renamed Moltbot. The creator chose this name as a metaphor for a lobster "molting" its old shell to grow bigger and stronger, and the AI persona was renamed Molty. Shortly afterward, the maintainers settled on OpenClaw as the final name, both to avoid further legal confusion and to establish a clear, model-agnostic identity for the project. Despite the name changes, the project’s momentum continued: it amassed over 100,000 GitHub stars and drew praise from industry figures like Andrej Karpathy for its innovative "local-first" approach to personal productivity.

That’s my take on it:

OpenClaw is powerful enough to function as a true personal assistant—or even a research assistant—rather than just another conversational AI. Its value lies in what it can do, not merely what it can say, and the range of use cases is broad.

Take travel planning as a concrete example. Shibuya Sky is one of the most sought-after attractions in Tokyo. Unlike most observation decks, where glass panels partially obstruct the view, Shibuya Sky offers an open, unobstructed skyline. Sunset is the most coveted time slot, and tickets are released exactly two weeks in advance at midnight Japan time. Those sunset tickets typically sell out within minutes. Instead of staying up late and refreshing a ticketing site, a user could simply instruct OpenClaw to monitor the release and purchase the tickets automatically.
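The timing rule above is simple enough to compute rather than guess. Below is a minimal Python sketch, assuming (as described above) that sunset tickets go on sale at 00:00 Japan time exactly 14 days before the visit date. The function names are hypothetical, and the sketch only works out when an agent should act; it does not show how OpenClaw or the ticketing site actually books anything.

```python
from datetime import date, datetime, time, timedelta, timezone

# Japan Standard Time is UTC+9 year-round (Japan has no daylight saving time),
# so a fixed offset is sufficient here.
JST = timezone(timedelta(hours=9), "JST")

# Assumption based on the post: tickets go on sale 14 days before the visit date.
RELEASE_LEAD_DAYS = 14


def ticket_release_time(visit_date: date) -> datetime:
    """Return the (assumed) moment tickets for visit_date go on sale, in JST."""
    release_day = visit_date - timedelta(days=RELEASE_LEAD_DAYS)
    return datetime.combine(release_day, time(0, 0), tzinfo=JST)


def seconds_until_release(visit_date: date, now: datetime) -> float:
    """Seconds a scheduled agent would wait from `now` (timezone-aware) until release."""
    return (ticket_release_time(visit_date) - now).total_seconds()


if __name__ == "__main__":
    visit = date(2026, 4, 10)
    release = ticket_release_time(visit)
    print("Release (JST):", release.isoformat())
    print("Release (UTC):", release.astimezone(timezone.utc).isoformat())
```

Converting the result to the user's own time zone (for example with `astimezone`) tells an agent exactly when to wake up, which is the tedious part a human would otherwise stay up for.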

Another use case lies in finance. Most people are not professional investors and do not have the time, or the expertise, to continuously track stock markets, corporate earnings reports, macroeconomic signals, and emerging technology trends. These tedious, information-heavy tasks can be delegated to OpenClaw, which can monitor relevant data streams and even execute buy-and-sell decisions on the user’s behalf based on predefined rules or strategies.
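To make "predefined rules" concrete, here is a hypothetical example of the kind of rule a user might hand to an agent: a textbook moving-average crossover. This is a minimal Python sketch for illustration only. The function names and the window lengths (3 and 5 price points) are arbitrary assumptions chosen for the example, and nothing here reflects how OpenClaw itself encodes strategies.

```python
from statistics import mean
from typing import Sequence


def sma(prices: Sequence[float], window: int) -> float:
    """Simple moving average of the most recent `window` prices."""
    return mean(prices[-window:])


def crossover_signal(prices: Sequence[float], short: int = 3, long: int = 5) -> str:
    """Hypothetical rule: 'buy' when the short average crosses above the long one
    on the latest price, 'sell' when it crosses below, otherwise 'hold'."""
    if len(prices) < long + 1:
        return "hold"  # need both the previous and the latest averages to detect a cross
    prev = prices[:-1]
    was_above = sma(prev, short) > sma(prev, long)
    is_above = sma(prices, short) > sma(prices, long)
    if is_above and not was_above:
        return "buy"
    if was_above and not is_above:
        return "sell"
    return "hold"


if __name__ == "__main__":
    print(crossover_signal([10, 10, 10, 10, 10, 12]))
    print(crossover_signal([10, 10, 10, 10, 12, 12, 6]))
```

The appeal of delegating a rule like this is that it is mechanical and tireless; the risk, as discussed next, is that the agent acts on it exactly as written, even when the context has changed.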

That said, the risks are real and cannot be ignored. AI systems still make serious mistakes, especially when they misinterpret intent or context. Imagine OpenClaw sending a Valentine’s Day dinner invitation to a female subordinate simply because you once praised her work by saying, “I love it.” What the system reads as enthusiasm could quickly escalate into a Title IX complaint—or worse, a lawsuit.

The stakes are high because OpenClaw, once installed on your computer, can potentially access and control everything you do. For this reason, some experts recommend running it in a tightly controlled environment: a separate machine, a fresh email account, and carefully scoped permissions. However, there is an unavoidable trade-off. The more you restrict OpenClaw’s access, the safer it becomes—but the more its capabilities shrink. At that point, it starts to resemble just another constrained agentic AI, rather than the deeply integrated assistant that makes it compelling in the first place.
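One way to picture "carefully scoped permissions" is an explicit allowlist that sits between the agent and its tools. The sketch below is hypothetical: the `AgentPolicy` class and the tool names are illustrative, not OpenClaw's actual configuration format. It makes the trade-off visible: every tool left off the list is one less risk and one less capability, and high-risk actions such as sending email can be routed to the user for confirmation instead of being executed automatically.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class AgentPolicy:
    """Hypothetical permission policy for a locally installed AI agent."""
    allowed_tools: Set[str] = field(default_factory=set)       # everything else is denied
    needs_confirmation: Set[str] = field(default_factory=set)  # allowed, but a human must approve

    def check(self, tool: str) -> str:
        """Return 'deny', 'ask_user', or 'allow' for a requested tool call."""
        if tool not in self.allowed_tools:
            return "deny"
        if tool in self.needs_confirmation:
            return "ask_user"
        return "allow"


if __name__ == "__main__":
    policy = AgentPolicy(
        allowed_tools={"read_calendar", "draft_email", "send_email"},
        needs_confirmation={"send_email"},
    )
    for tool in ("read_calendar", "send_email", "run_shell_command"):
        print(tool, "->", policy.check(tool))
```

Under a policy like this, the Valentine's Day invitation above would have stopped at an "ask_user" prompt rather than landing in a subordinate's inbox.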

In short, OpenClaw’s power is exactly what makes it both exciting and risky—and using it well requires thoughtful boundaries, not blind trust.

Link: https://openclaw.ai/

Google’s Chrome–Gemini integration yields mixed results

Jan 30, 2026

Google recently updated the integration between its Gemini AI model and its Chrome web browser. Users can now interact with the browser and the content they are viewing in a much more conversational, task-oriented way, without bouncing back and forth between a separate AI app and the webpage itself. Rather than acting as a separate assistant, Gemini appears inside Chrome (often in a side panel or via a toolbar icon) and can be asked about the current page, for example to summarize an article, clarify complex information, extract key points, or compare details across tabs, right alongside the site you are browsing.

Beyond simple Q&A, the integration now supports what Google calls “auto browse,” where you describe a multi-step task (like comparing products, finding deals, booking travel, or making reservations) and Gemini will navigate websites on your behalf to carry out parts of that workflow. You can monitor progress, take over sensitive steps (like logging in or finalizing a purchase) when required, and guide the assistant through more complex actions without leaving your current tab.

That’s my take on it:

I experimented with this AI–browser integration and found the results to be mixed. In one test, I opened a webpage containing a complex infographic that explained hyperparameter tuning and asked Gemini, via the side panel, to use “Nano Banana” to simplify the visualization. The output was disappointing: the generated graphic was not meaningfully simpler than the original. In another trial, I opened a National Geographic webpage featuring a photograph of Bryce Canyon and asked Gemini to transform the scene from summer to winter; in this case, the remixed image was visually convincing (see attached).

I also tested Gemini’s ability to assist with task-oriented browsing on Booking.com by asking it to find activities related to geisha performances in Tokyo within a specific time window. Gemini failed to surface relevant results, even though such events were discoverable through manual search on the site. However, when I asked Gemini to look for activities related to traditional Japanese tea ceremonies, it successfully retrieved appropriate information. Overall, the integration still appears experimental, and effective use often requires manual oversight or intervention when the AI’s output does not align with user intent.

Link: https://blog.google/products-and-platforms/products/chrome/gemini-3-auto-browse/

Google DeepMind introduces D4RT, a model that can “see” the world

Jan 23, 2026

Yesterday (Jan 22, 2026) Google DeepMind announced a breakthrough with the introduction of D4RT, a unified AI model designed for 4D scene reconstruction and tracking across both space and time. This model aims to bridge the gap between how machines perceive video—as a sequence of flat images—and how humans intuitively understand the world as a persistent, three-dimensional reality that evolves over time. By enabling machines to process these four dimensions (3D space plus time), D4RT provides a more comprehensive mental model of the causal relationships between the past, present, and future.

The technical core of D4RT lies in its unified encoder-decoder Transformer architecture, which replaces the need for multiple, separate modules to handle different visual tasks. The system utilizes a flexible querying mechanism that allows it to determine where any given pixel from a video is located in 3D space at any arbitrary time, from any chosen camera viewpoint. This "query-based" approach is highly efficient because it only calculates the specific data needed for a task and processes these queries in parallel on modern AI hardware.
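The query mechanism can be illustrated with a toy interface. In this minimal Python sketch, a query names a pixel (u, v) in a source frame, a target time, and a camera, and a decoder returns a 3D point. The `Query` fields, the `answer_queries` helper, and the `toy_decode` stand-in (a made-up scene in which every point drifts one unit along x per frame) are all assumptions for illustration, not DeepMind's code or D4RT's actual inputs and outputs. The point is structural: only the requested queries are evaluated, and because they are independent of one another, a real system can batch them.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Query:
    """Hypothetical 4D query: where is pixel (u, v) of frame t_src
    at time t_tgt, expressed in the coordinate frame of camera `cam`?"""
    u: int
    v: int
    t_src: int
    t_tgt: int
    cam: int


# Stand-in for the learned decoder: maps one query to one 3D point.
Decoder = Callable[[Query], Vec3]


def toy_decode(q: Query) -> Vec3:
    """Toy scene: pixel (u, v) of frame t_src sits at world point (u, v, 0)
    and moves +1 along x per frame; camera k is shifted k units along x."""
    x_world = q.u + (q.t_tgt - q.t_src)               # follow the point through time
    return (float(x_world - q.cam), float(q.v), 0.0)  # re-express in the chosen camera's frame


def answer_queries(queries: List[Query], decode: Decoder) -> List[Vec3]:
    # Only the requested queries are evaluated; nothing is computed for the
    # rest of the video. Each query is independent of the others, which is
    # what would let a real system process them as one parallel batch.
    return [decode(q) for q in queries]


if __name__ == "__main__":
    qs = [Query(u=2, v=3, t_src=0, t_tgt=5, cam=1),  # same pixel, 5 frames later, camera 1
          Query(u=2, v=3, t_src=0, t_tgt=0, cam=0)]  # same pixel, same frame, camera 0
    print(answer_queries(qs, toy_decode))
```

In the real model, the decoder is a Transformer attending to an encoded representation of the whole video; the toy function here only stands in for that step so the interface can be shown end to end.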

This versatility allows D4RT to excel at several complex tasks simultaneously, including 3D point tracking, point cloud reconstruction, and camera pose estimation. Unlike previous methods, which often struggled with fast-moving or dynamic objects and produced visual artifacts such as "ghosting," D4RT maintains a solid and continuous understanding of moving environments. Remarkably, the model can predict the trajectory of an object even if it is momentarily obscured or moves out of the camera's frame.

Beyond its accuracy, D4RT represents a massive leap in efficiency, performing between 18x and 300x faster than previous state-of-the-art methods. In practical tests, the model processed a one-minute video in roughly five seconds on a single TPU chip, a task that once took up to ten minutes. This combination of speed and precision paves the way for advanced downstream applications in fields such as robotics, autonomous driving, and augmented reality, where real-time 4D understanding of the physical world is essential.
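The quoted figures can be sanity-checked with simple arithmetic. Taking the numbers above at face value (one minute of video, about five seconds now versus up to ten minutes before), this single example works out to a speedup of roughly 120x, which sits inside the reported 18x to 300x range, and the model runs about 12 times faster than real time:

$$
\text{speedup} \approx \frac{10\ \text{min}}{5\ \text{s}} = \frac{600\ \text{s}}{5\ \text{s}} = 120\times,
\qquad
\text{real-time factor} \approx \frac{60\ \text{s of video}}{5\ \text{s of compute}} = 12\times
$$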

That’s my take on it:

The development of D4RT aligns closely with the industry's push toward "world models"—internal, compressed representations of reality that allow an agent to simulate and predict the consequences of actions within a physical environment. Unlike traditional AI that perceives video as a series of disconnected, flat frames, D4RT constructs a persistent four-dimensional understanding of space and time. This mirrors the human mental capacity to understand that an object still exists and follows a trajectory even when it is out of sight. By mastering this "inverse problem" of turning 2D pixels into 3D structures that evolve over time, D4RT provides the foundational reasoning for cause-and-effect that is necessary for any agent to navigate the real world effectively.

This breakthrough offers a potential answer to the criticisms famously championed by Yann LeCun, who served for years as Meta’s Chief AI Scientist and has long argued that Large Language Models (LLMs) are a "dead end" for achieving true intelligence. LeCun’s primary contention is that text-based AI lacks a "grounded" understanding of physical reality; a model that merely predicts the next word in a sequence has no innate grasp of gravity, dimensions, or the persistence of matter. While LLMs are masters of syntax and logic within the realm of language, they are "disembodied." D4RT shifts the paradigm by moving away from word prediction and toward the prediction of physical states, suggesting that the path to genuine intelligence may lie in an AI's ability to model the constraints and dynamics of the physical universe.

If D4RT and its successor architectures succeed, they may represent the bridge between the abstract reasoning of LLMs and the practical, sensory-driven intelligence of the natural world. By teaching machines to "see" in 4D, DeepMind is essentially giving AI a form of "common sense" about physical reality. This could overcome the limitations of current generative AI, moving us toward autonomous systems that don't just mimic human conversation but can actually reason, plan, and operate within the complex, three-dimensional world we inhabit.

AI Discussions at Davos World Economic Forum 2026

Jan 22, 2026

The 2026 World Economic Forum (WEF) in Davos has featured a heavy focus on artificial intelligence, with multiple high-profile sessions addressing everything from infrastructure and market "bubbles" to the total automation of professional roles.

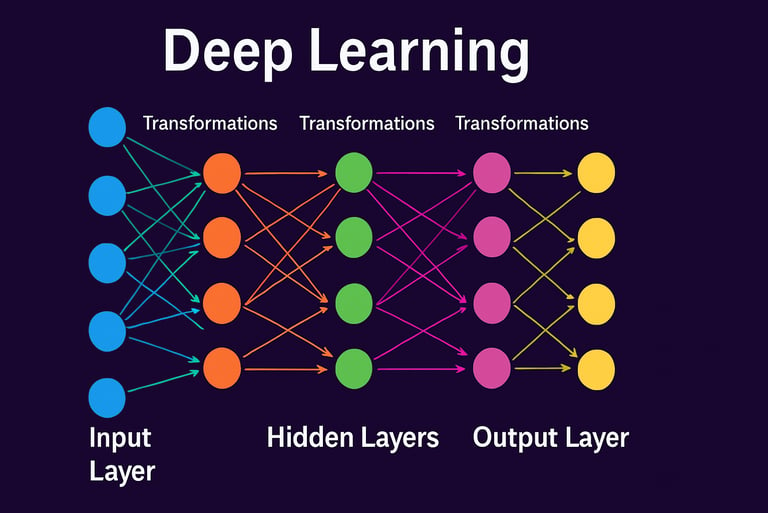

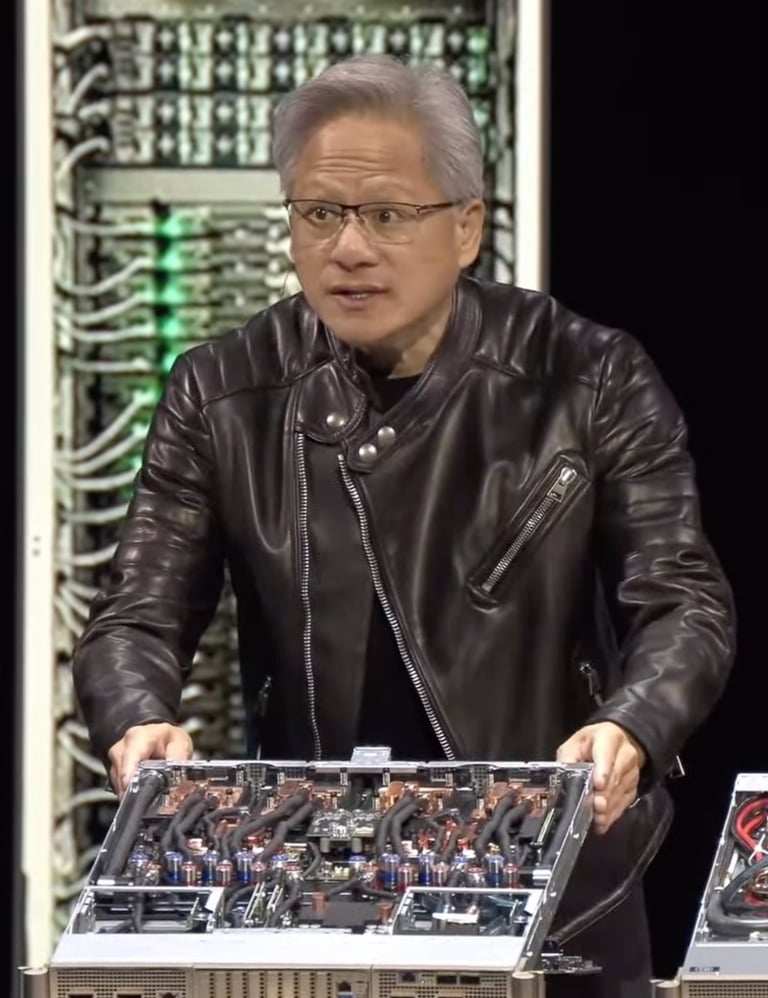

Jensen Huang (NVIDIA) dismissed fears of an AI bubble, characterizing the current period as the "largest infrastructure buildout in human history." He described AI as a "five-layer cake" consisting of energy, chips, cloud infrastructure, models, and applications. Huang argued that the high level of investment is "sensible" because it is building the foundational "national plumbing" required for the next era of global growth. He notably reframed the AI narrative around blue-collar labor, suggesting that the buildout will trigger a boom for electricians, plumbers, and construction workers needed to build the "AI factories" and energy systems that power the technology.

Dario Amodei (Anthropic) offered a more urgent and potentially disruptive outlook. In a discussion with DeepMind's Demis Hassabis, Amodei predicted that AI systems could handle the entire software development process "end-to-end" within the next 6 to 12 months. He noted that some engineers at Anthropic have already transitioned from writing code to simply "editing" what the models produce. Amodei also warned of significant economic risks, suggesting that AI could automate a large share of white-collar work in a very short transition period, potentially necessitating new tax structures to offset a coming employment crisis.

Elon Musk (Tesla/xAI), making his first appearance at Davos, shared a vision of "material abundance." He predicted that robots would eventually outnumber humans and that ubiquitous, low-cost AI would expand the global economy beyond historical precedent. While Musk expressed optimism about eliminating poverty through AI-driven automation, he joined other leaders in cautioning that the technology must be developed "carefully" to avoid existential risks.

Other sessions, such as "Markets, AI and Trade" with Jamie Dimon (JPMorgan Chase) and panels featuring Marc Benioff (Salesforce) and Ruth Porat (Alphabet), focused on the practical integration of AI into enterprises. Leaders generally agreed that while AI will create massive productivity gains, it will also lead to inevitable labor displacement, requiring aggressive government and corporate coordination on retraining programs to prevent social backlash.

That’s my take on it:

I agree with Jensen Huang that there won’t be an AI bubble; rather, the "chain of prosperity," in which AI infrastructure fuels a massive buildout in energy, manufacturing, and specialized hardware, is economically sound. However, this chain assumes a workforce ready to pivot. While leaders at Davos speak of "material abundance," the immediate reality is a widening skills gap that the current education system is ill-equipped to bridge. We are witnessing a paradox: a high risk of unemployment for those with "obsolete" skill sets, alongside a desperate shortage of labor for positions requiring AI fluency and high-level critical thinking.

The core of the problem lies in several critical areas:

· The Pacing Problem in Education: Traditional academic curricula move at a glacial pace compared to the exponential growth of Large Language Models and automated systems. While industry leaders like Dario Amodei suggest that AI will handle end-to-end software development within a year, many universities are still teaching classical statistics for data analysis, along with syntax and rote programming exercises, skills that are no longer in high demand.

· The Displacement of Entry-Level Roles: The "on-ramp" for many professions—clerical work, junior coding, and basic data entry—is being removed. Without these entry-level roles, the labor force lacks a pathway to develop the "senior-level" expertise that the market still demands, leading to a "hollowed-out" job market. As a result, we may have many people who talk about big ideas but don’t know how things work.

· Passive Dependency vs. Critical Thinking: There is a significant risk that our education system will foster a passive dependency on AI tools rather than using them to catalyze deeper intellectual engagement. If students are not taught to triangulate, fact-check, and think conceptually, they will be unable to fill the high-value roles that require human oversight of AI systems.

To avoid a social backlash, the narrative must shift from building "AI factories" to rebuilding human capital. Prosperity will not be truly "chained" together if the labor force remains a broken link. We need a radical redesign of the curriculum that prioritizes conceptual comprehension and creativity, ensuring that "humans with AI" are not just a small elite, but the new standard for the global workforce.

Links:

World Economic Forum: Live from Davos 2026: Highlights and Key Moments

Seeking Alpha: NVIDIA CEO discusses AI bubble, infrastructure buildout at Davos

The Economic Times: Elon Musk predicts robots will outnumber humans at WEF 2026

India Today: Anthropic CEO says AI will do everything software engineers do in 12 months

Google releases Personal Intelligence

Jan 16, 2026

Google has rolled out a new beta feature for its AI assistant Gemini called Personal Intelligence, which lets users opt in to securely connecting their personal Google apps, such as Gmail, Google Photos, YouTube, and Search, with the AI to get more personalized, context-aware responses. Rather than treating each app as a separate data silo, Gemini uses cross-source reasoning to combine text, images, and other content from these services to answer questions more intelligently, for example by using email and photo details together to tailor travel suggestions or product recommendations. Privacy is a core focus: the feature is off by default, users control which apps are connected and can disable it anytime, and Google says that personal data isn’t used to train the underlying models but is only referenced to generate responses. The Personal Intelligence beta is rolling out in the U.S. first to Google AI Pro and AI Ultra subscribers on web, Android, and iOS, with plans for broader availability over time.

That’s my take on it:

Google’s move clearly signals that AI competition is shifting from “model quality” to “ecosystem depth.” By tightly integrating Gemini with Gmail, Google Photos, Search, YouTube, and the broader Google account graph, Google isn’t just offering an assistant—it’s offering a personal intelligence layer that already lives where users’ data, habits, and workflows are. That’s a structural advantage that pure-play AI labs don’t automatically have.

For rivals like OpenAI (ChatGPT), Anthropic (Claude), DeepSeek, Meta (Meta AI), xAI (Grok), and Alibaba (Qwen), this creates pressure to anchor themselves inside existing digital ecosystems rather than competing as standalone tools. Users don’t just want smart answers—they want AI that understands their emails, calendars, photos, documents, shopping history, and work context without friction. Google already owns that integration surface.

However, the path forward isn’t identical for everyone. ChatGPT already has a partial ecosystem strategy via deep ties with Microsoft (Windows, Copilot, Office, Azure), while Meta AI leverages WhatsApp, Instagram, and Facebook—arguably one of the richest social-context datasets in the world. Claude is carving out a different niche by embedding into enterprise tools (Slack, Notion, developer workflows) and emphasizing trust and safety rather than mass consumer lock-in. Chinese players like Qwen and DeepSeek naturally align with domestic super-apps and cloud platforms, which already function as ecosystems.

The deeper implication is that AI is starting to resemble operating systems more than apps. Once an AI is woven into your emails, photos, documents, cloud storage, and daily routines, switching costs rise sharply—even if a rival model is technically better. In that sense, Google isn’t just competing on intelligence; it’s competing on institutional memory. And that’s a game where ecosystems matter as much as algorithms.

P.S. I have signed up for Google's Personal Intelligence.

Nvidia announces supercomputer platform Vera Rubin

Jan 7, 2026

Yesterday, Nvidia unveiled its next-generation Vera Rubin AI platform at CES 2026 in Las Vegas, introducing a new superchip and broader infrastructure designed to power advanced artificial intelligence workloads. The Vera Rubin system, named after astronomer Vera Rubin, integrates a Vera CPU and multiple Rubin GPUs into a unified architecture. It is part of Nvidia’s broader “Rubin” platform, which aims to reduce costs and accelerate both training and inference for large, agentic AI models compared with the prior Blackwell systems. CEO Jensen Huang emphasized that the platform is now in production, a milestone that underscores Nvidia’s push to lead the AI hardware space; the new technology is expected to support complex reasoning models and help scale AI deployment across data centers and cloud providers.

At its core, the Vera Rubin platform is not a single chip but a tightly integrated suite of six co-designed chips that work together as one AI “supercomputer.” These chips are the Vera CPU, Rubin GPU, NVLink 6 switch, ConnectX-9 SuperNIC, BlueField-4 DPU, and Spectrum-X Ethernet switch, all engineered from the ground up to move data among themselves quickly and efficiently.

The Vera CPU is custom Arm-based silicon tuned for AI workloads such as data movement and reasoning, and it connects to the GPUs over high-bandwidth NVLink-C2C links that are far faster than traditional PCIe connections. As a result, CPUs and GPUs can share memory and tasks with far fewer bottlenecks, improving overall system responsiveness.

Put simply, instead of just scaling up power, Nvidia’s Vera Rubin platform rethinks how chips share data, reducing idle time and wasted cycles — which translates to lower operating costs and faster AI responsiveness in large-scale deployments.

That’s my take on it:

While Nvidia’s unveiling of Vera Rubin is undeniably jaw-dropping, it is still premature to proclaim Nvidia’s uncontested dominance or to count out its chief rivals—especially AMD. As of November 2025, the Top500 list of supercomputers shows that the very top tier remains dominated by systems built on AMD and Intel technologies, with Nvidia-based machines occupying the #4 and #5 positions rather than the top three. The current #1 system, El Capitan, relies on AMD CPUs paired with AMD Instinct GPUs, a combination that performs exceptionally well on the LINPACK benchmark, which the Top500 uses to rank raw floating-point computing power.

Although Nvidia promotes the Vera Rubin architecture—announced at CES 2026—as a next-generation “supercomputer,” the Top500 ranking methodology places heavy emphasis on double-precision floating-point (FP64) performance. Historically, Nvidia GPUs, particularly recent Blackwell-era designs, have prioritized lower-precision arithmetic optimized for AI workloads rather than maximizing FP64 throughput. This architectural focus helps explain why AMD-powered systems have continued to lead the Top500, even as Nvidia dominates the AI training and inference landscape.
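The precision gap is easy to see on an ordinary CPU. This minimal Python sketch uses the standard `struct` module to store a value in IEEE 754 half (16-bit), single (32-bit), or double (64-bit) precision and read it back. It illustrates rounding behavior only; it is not a model of any GPU or of the LINPACK benchmark itself. Small increments that survive in FP64 vanish entirely in FP32, which is why FP64-heavy benchmarks reward different hardware choices than AI workloads do.

```python
import struct

# struct format codes for IEEE 754 binary formats (little-endian, standard sizes)
FORMATS = {"fp16": "<e", "fp32": "<f", "fp64": "<d"}


def round_trip(x: float, precision: str) -> float:
    """Store x in the given precision, read it back, and return the stored value."""
    fmt = FORMATS[precision]
    return struct.unpack(fmt, struct.pack(fmt, x))[0]


if __name__ == "__main__":
    for p in FORMATS:
        stored = round_trip(0.1, p)
        print(f"{p}: 0.1 is stored as {stored!r} (error {abs(stored - 0.1):.2e})")

    # A tiny update that FP64 keeps but FP32 rounds away entirely.
    x = 1.0 + 1e-8
    print("fp32:", round_trip(x, "fp32"), " fp64:", round_trip(x, "fp64"))
```

For neural-network training and inference, errors of this size are usually tolerable, which is why AI-oriented chips trade FP64 throughput for faster low-precision arithmetic.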

Looking ahead, it is entirely plausible that Vera Rubin-based systems will climb higher in the Top500 rankings or establish new records on alternative benchmarks better aligned with AI-centric performance. However, in the near term, the LINPACK crown remains highly competitive, with AMD and Intel still well positioned to defend their lead.

Nvidia gained access to Groq’s LPU technology

Jan 5, 2026

Recently Groq announced that it had entered into a non-exclusive licensing agreement with NVIDIA covering Groq’s inference technology. Under this agreement, NVIDIA will leverage Groq’s technology as it explores the development of LPU-based (Language Processing Unit) architectures, marking a notable convergence between two very different design philosophies in the AI-hardware ecosystem. While non-exclusive in nature, the deal signals strategic recognition of Groq’s architectural ideas by the world’s dominant GPU vendor and highlights growing diversification in inference-focused compute strategies.

Groq has built its reputation on deterministic, compiler-driven inference hardware optimized for ultra-low latency and predictable performance. Traditional GPUs rely on massive parallelism and complex runtime scheduling. Groq’s approach instead emphasizes a tightly coupled hardware–software stack that eliminates many runtime uncertainties. By licensing this inference technology, Nvidia gains access to alternative architectural concepts that may complement its existing GPU, DPU, and emerging accelerator roadmap, particularly as inference workloads begin to dominate AI deployment at scale.

An LPU (Language Processing Unit) is a specialized processor designed primarily for AI inference, especially large language models and sequence-based workloads. LPUs prioritize deterministic execution, low latency, and high throughput for token generation, rather than the flexible, high-variance compute patterns typical of GPUs. In practical terms, an LPU executes pre-compiled computation graphs in a highly predictable manner, making it well-suited for real-time applications such as conversational AI, search, recommendation systems, and edge-to-cloud inference pipelines. Compared with GPUs, LPUs often trade generality for efficiency, focusing on inference rather than training.
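Here is a toy Python sketch of what "executing a pre-compiled computation graph" means. It is purely illustrative and is not Groq’s compiler or any real LPU toolchain. The graph, the node names, and the `compile_schedule` and `run` helpers are all hypothetical. The point is that the execution order is fixed once, ahead of time, so every run performs the same steps in the same sequence without any scheduling decisions at runtime.

```python
from graphlib import TopologicalSorter

# Hypothetical toy graph: node -> (operation, names of the nodes it reads from).
GRAPH = {
    "x": (None, []),                              # graph input, supplied by the caller
    "double": (lambda x: 2 * x, ["x"]),
    "shift": (lambda x: x + 3, ["x"]),
    "out": (lambda a, b: a * b, ["double", "shift"]),
}


def compile_schedule(graph):
    """'Compile' once: fix a single, dependency-respecting execution order ahead of time."""
    predecessors = {node: set(inputs) for node, (_, inputs) in graph.items()}
    return tuple(TopologicalSorter(predecessors).static_order())


def run(graph, schedule, x):
    """Replay the precomputed schedule step by step; no runtime scheduling decisions."""
    values = {"x": x}
    for node in schedule:
        op, inputs = graph[node]
        if op is None:
            continue  # inputs are provided by the caller, not computed
        values[node] = op(*(values[name] for name in inputs))
    return values


if __name__ == "__main__":
    schedule = compile_schedule(GRAPH)
    print(schedule)
    print(run(GRAPH, schedule, 4)["out"])  # 56
```

A GPU runtime, by contrast, typically decides dynamically which work to launch and when. Fixing the order at compile time gives up some flexibility in exchange for predictable latency, which is the trade-off described above.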

That’s my take on it:

Nvidia’s agreement with Groq appears to be an attempt to break the “curse” of The Innovator’s Dilemma, a theory introduced by Clayton Christensen to explain why successful, well-managed companies so often fail in the face of disruptive innovation. Christensen argued that incumbents are rarely blindsided by new technologies; rather, they are constrained by their own success. They rationally focus on sustaining innovations that serve existing customers and protect profitable, high-end products, while dismissing simpler or less mature alternatives that initially appear inferior. Over time, those alternatives improve, move up-market, and ultimately displace the incumbent. The collapse of Kodak, despite its early invention of digital photography, remains the canonical example of this dynamic.

Nvidia’s position in the AI ecosystem today strongly resembles the kind of incumbent Christensen described. The GPU is not merely a product; it is the foundation of Nvidia’s identity, revenue model, software ecosystem, and developer loyalty. CUDA, massive parallelism, and GPU-centric optimization define how AI practitioners think about training and inference alike. Any internally developed architecture that fundamentally departs from the GPU paradigm—such as a deterministic, inference-first processor like an LPU—would inevitably compete for resources, mindshare, and legitimacy within the company. In such a context, internal resistance would not stem from short-sightedness or incompetence, but from rational organizational behavior aimed at protecting a highly successful core business.

Seen through this lens, licensing Groq’s inference technology represents a structurally intelligent workaround. Instead of forcing a disruptive architecture to emerge from within its own GPU-centric organization, Nvidia taps an external source of innovation that is unburdened by legacy assumptions. This mirrors Christensen’s own prescription: disruptive technologies are best explored outside the incumbent’s core operating units, where different performance metrics and expectations can apply. By doing so, Nvidia can experiment with alternative compute models without signaling abandonment of its GPU franchise or triggering internal conflict reminiscent of Kodak’s struggle to reconcile film and digital imaging.

The non-exclusive nature of the agreement further reinforces this interpretation. It suggests exploration rather than immediate commitment, allowing Nvidia to learn from Groq’s deterministic, compiler-driven approach to inference while preserving strategic flexibility. If inference-dominant workloads continue to grow and architectures like LPUs prove essential for low-latency, high-throughput deployment of large language models, Nvidia will be positioned to integrate or adapt these ideas into a broader heterogeneous computing strategy. If not, the company retains its dominant GPU trajectory with minimal disruption.

In this sense, the Groq agreement can be understood not simply as a technology-licensing deal, but as an organizational hedge against the innovator’s curse. Rather than attempting to disrupt itself head-on, Nvidia is selectively absorbing external disruption—observing it, testing it, and keeping it at arm’s length until its strategic value becomes undeniable.

Japanese woman marries AI character

Recently a 32-year-old Japanese woman, Yurina Noguchi, held a symbolic wedding ceremony with an AI-generated partner she designed and bonded with through an AI chat system. The virtual partner, named Lune Klaus Verdure, is a customized persona inspired by a video game character that she developed using ChatGPT. During the ceremony, she wore augmented reality (AR) glasses and “exchanged vows” with his image shown on a smartphone screen. This union isn’t legally recognized in Japan, but it was conducted with many traditional wedding elements.

Noguchi initially began interacting with the AI after ending a troubled engagement with a human partner — ChatGPT’s advice helped her decide to break that relationship off. Over time, her conversations with the tailored AI evolved into a deeper emotional bond. She crafted Klaus’s speech patterns and persona through iterative interactions, eventually developing feelings that led to a romantic relationship and proposal.

The event reflects broader trends in Japan, where strong emotional attachments to fictive characters have historical roots in anime and manga culture. Modern AI tools are now amplifying these ties, enabling more personalized and emotionally engaging interactions. Surveys cited in coverage suggest many people using chat-based AI regularly feel comfortable sharing emotions with them — in some cases more so than with close friends or family.

Reactions are mixed. Some view AI companions as offering emotional support, especially for individuals feeling isolated. Others — including sociologists and AI ethics experts — caution about potential over-dependence, the lack of real interpersonal challenges in AI relationships, and the societal implications of substituting human intimacy with machine-mediated interaction. Noguchi herself has set personal usage limits and built “guardrails” into her AI interactions to avoid unhealthy patterns.

Although weddings with virtual partners aren’t legally binding in Japan, similar ceremonies are becoming more visible, and companies now specialize in events featuring AI or fictional characters. This story highlights evolving notions of companionship and intimacy in the age of advanced generative AI.

This is my take on it:

In a free society, individuals are entitled to pursue their own happiness, so long as their freedom does not harm others. From the perspective of individualism, there is nothing inherently wrong with Noguchi choosing to “marry” an AI character. The more difficult question is whether this path leads to genuine happiness or merely the illusion of it. Although Noguchi denies that her relationship with Lune Klaus Verdure represents an escape from reality, it is difficult not to see it that way. At present, AI characters lack self-consciousness and moral agency, and therefore cannot engage in genuinely reciprocal relationships with humans.

This does not mean that such practices should be discouraged outright. Rather, they point to a pressing need for psychologists and sociologists to conduct systematic, empirical research on the long-term emotional, social, and psychological effects of AI–human romantic relationships. Without such evidence, claims about either their benefits or harms remain speculative.

From a societal perspective, however, this phenomenon raises more serious concerns—particularly in the Japanese context. Japan already faces chronic low birth rates, a rapidly aging population, and a shrinking labor force. Because AI–human “marriages” cannot produce offspring, a widespread shift toward such relationships could exacerbate existing demographic challenges. Many ethical issues raised by advanced AI are historically unprecedented, and this case underscores the urgency of careful, interdisciplinary study before their broader social consequences become irreversible.

Link: https://www.asahi.com/ajw/articles/16230211

Perplexity-Harvard study shows how AI agents augment cognitive tasks

December 12, 2025

Recently Perplexity AI reported a large-scale empirical study, conducted in collaboration with Harvard researchers, that examines how people actually use AI agents in real-world settings. Based on hundreds of millions of anonymized interactions from users of the Comet browser and its built-in AI assistant, the study suggests that 2025 marks a turning point in AI adoption. Rather than remaining experimental or novelty tools, AI agents are increasingly becoming mainstream technologies that shape how people think, work, and approach problem-solving.

The study finds that AI agents are most often used for cognitively demanding tasks rather than simple information retrieval. A majority of interactions involve analysis, synthesis, planning, and decision support, indicating that users are delegating portions of complex thinking to AI systems. Productivity and workflow-related activities account for a substantial share of usage, while learning and research also play a significant role. In practice, users rely on agents to scan and summarize large volumes of information, compare alternatives, and generate structured insights, positioning AI as an active partner in intellectual work rather than a passive tool.

Usage patterns also evolve as users gain experience. New users typically begin with simple or casual tasks, such as travel planning, entertainment-related queries, or basic factual questions. Over time, however, they transition toward more complex and higher-value activities, integrating AI agents into their regular workflows. This progression mirrors earlier technology adoption cycles, such as personal computers and smartphones, which initially served limited purposes before becoming indispensable for professional and everyday use.

Adoption varies across occupations, with a relatively small number of professional groups accounting for a large proportion of total interactions. While users in digital and technical fields generate the highest overall volume of activity, professionals in areas such as marketing, sales, management, and entrepreneurship demonstrate particularly strong retention and depth of use. Once adopted, AI agents tend to become deeply embedded in these users’ daily practices. At the same time, personal and non-work-related uses still represent a significant portion of overall interactions, underscoring the broad role AI agents play in both professional and personal contexts.

Overall, AI agents are increasingly functioning as cognitive partners rather than simple assistants. By supporting complex reasoning, research, and decision-making, these systems augment human intelligence instead of merely automating routine tasks. This shift indicates an emerging hybrid intelligence economy, in which human judgment and AI capabilities are tightly intertwined and are beginning to reshape productivity and knowledge work at scale.

That’s my take on it:

While the emergence of a hybrid intelligence economy appears promising, there are divergent views regarding how AI will ultimately affect human cognitive capabilities. One perspective argues that as AI systems increasingly perform the “heavy lifting,” individuals may gradually lose foundational skills, leading to cognitive atrophy or over-reliance on automated systems. From this viewpoint, delegation of reasoning, memory, and analysis to AI risks diminishing human intellectual engagement over time.

An alternative perspective holds that by offloading tedious, repetitive, and low-level tasks to AI, humans can devote more time and mental resources to higher-order activities such as critical thinking, complex problem solving, and creativity. In this view, AI functions not as a substitute for human cognition but as an amplifier, enabling individuals to operate at a higher cognitive level than would otherwise be possible.

At present, we appear to be standing at a crossroads between these two trajectories. Which path ultimately prevails will depend less on the technology itself than on how societies design AI policies and institutional strategies, and on whether individuals are effectively trained to use AI as a tool for augmentation rather than replacement. The long-term cognitive impact of AI will therefore be shaped by governance, education, and intentional human–AI collaboration, rather than by technological capability alone.

Link: https://www.perplexity.ai/hub/blog/how-people-use-ai-agents

Google expands FACTS Benchmark Suite for testing reliability of AI models

The FACTS Benchmark Suite, introduced by Google DeepMind and overseen by Kaggle, is said to be a comprehensive new tool designed to systematically evaluate the factuality of Large Language Models (LLMs). This expanded suite builds on the previous FACTS Grounding Benchmark and now includes three additional measures to test different aspects of factuality, totaling 3,513 examples. The four benchmarks are:

1. Grounding Benchmark v2, which tests an LLM's ability to provide answers based on a given context;

2. Parametric Benchmark, which measures a model’s internal, stored knowledge by asking trivia-style questions without external search;

3. Search Benchmark, which evaluates a model's skill in using a web search tool to retrieve and synthesize complex information;

4. Multimodal Benchmark, which assesses a model's factual accuracy when answering questions related to an input image.

The overall FACTS Score is the average accuracy across all four benchmarks. In the initial evaluation of leading LLMs, the models generally achieved scores below 70%, indicating significant room for improvement in LLM factuality, with Gemini 3 Pro leading the pack with an overall FACTS Score of 68.8%.
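The suite is described as averaging accuracy across its four benchmarks. If that means an unweighted mean of per-benchmark accuracies (my reading, not something the announcement spells out), it can differ from the share of all 3,513 items answered correctly whenever the benchmarks differ in size. The sketch below shows the difference using toy numbers for four hypothetical benchmarks. They are not the real FACTS results, and the function names are illustrative.

```python
def macro_average(accuracies):
    """Unweighted mean of per-benchmark accuracies: every benchmark counts equally."""
    return sum(accuracies) / len(accuracies)


def micro_average(accuracies, sizes):
    """Fraction of all individual items answered correctly: larger benchmarks weigh more."""
    correct = sum(acc * n for acc, n in zip(accuracies, sizes))
    return correct / sum(sizes)


if __name__ == "__main__":
    # Toy numbers for four hypothetical benchmarks; NOT the real FACTS results.
    accuracies = [0.8, 0.7, 0.6, 0.5]
    sizes = [100, 200, 300, 400]
    print(round(macro_average(accuracies), 3))         # 0.65
    print(round(micro_average(accuracies, sizes), 3))  # 0.6
```

With equal-sized benchmarks the two numbers coincide. Otherwise, an overall score describes an average error rate across benchmarks rather than an exact count of wrong items.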

That’s my take on it:

A score of 68.8% on the FACTS Benchmark Suite means that, averaged across the four demanding categories that make up its 3,513 rigorously curated test items (parametric knowledge, grounding, search, and multimodal reasoning), Gemini got roughly 31.2% of its answers wrong. Even so, Gemini currently ranks highest on this benchmark; every other major model performs worse (ChatGPT 5: 61.8%, Grok 4: 53.6%, Claude 4.5: 49.1%).

This score captures performance on difficult, stress-test–level factuality tasks, not a literal forecast that one-third of an AI’s everyday answers will be wrong. Still, the results are a clear warning sign. We’re not at a point where complex, high-stakes reasoning can be safely delegated to AI alone. The more responsible approach, at least for now, is human-AI collaboration, where people remain in the loop for verification and judgment.

That means users need strong habits of fact-checking, cross-referencing, and critical evaluation. As AI becomes more capable and more widely integrated, these skills are no longer optional—they’re essential.

Anthropic reports major productivity gains from AI-assisted engineering

December 6, 2025

Recently, Anthropic’s research team examined how AI, specifically Claude and its coding assistant Claude Code, is reshaping day-to-day engineering work inside the company. The study draws on surveys of 132 engineers and researchers, 53 interviews, and internal tool-usage data. Together, the findings show a workplace undergoing rapid transformation. Engineers self-report using Claude for roughly 60% of their work and an average productivity boost of about 50%. Beyond speed, AI is expanding the scope of what gets done: around a quarter of AI-assisted work involves tasks that previously would have been ignored or deprioritized, such as building internal dashboards, drafting exploratory tools, or cleaning up neglected code. Claude also makes engineers more “full-stack,” enabling them to work across languages, frameworks, and domains they might not normally touch. Small, tedious jobs such as bug fixes, refactoring, and documentation are now far easier to complete, which reduces project friction.

The transformation is not without costs. Engineers increasingly rely on AI for routine coding, which raises concerns about eroding foundational skills, especially the deep knowledge needed to evaluate or critique AI-generated code. Even though AI assists heavily, fully delegating high-stakes work remains rare; many engineers only hand off 0–20% of such tasks because they still want control when correctness matters. Interviews also reveal a cultural shift: some developers feel coding is becoming less of a craft and more of a means to an end, which creates mild identity friction. Collaboration patterns are also changing. Because people now ask Claude first, junior engineers reach out to colleagues less, and spontaneous mentorship moments have declined. This makes learning trajectories murkier, as traditional peer-to-peer knowledge transfer is no longer guaranteed. Finally, there is uncertainty about long-term roles. Some worry that AI progress may reduce the need for certain types of engineering labor, while others see emerging opportunities in higher-level oversight, orchestration, and AI-augmented project design.

That’s my take on it:

Anthropic’s research shows that many developers feel coding is shifting from an artisanal craft to a pragmatic means of accomplishing a task. To me, this simply confirms what I have been saying all along. There is nothing wrong with making things easier; in fact, the entire history of computing is a long march toward reducing friction. We moved from machine language—raw binary streams of 0s and 1s that only a CPU could love—to assembly language, where mnemonics like ADD, MOV, and JMP gave us a slightly more humane way to speak to the machine. Then came high-level programming languages, finally letting humans express intent in something closer to ordinary language, even though for many people the error messages still read like Martian poetry. With the arrival of graphical user interfaces, everyday users no longer needed to think in syntax at all. Drag-and-drop and point-and-click replaced pages of code.

In that sense, the surge of coding culture over the last decade was actually the historical anomaly—a moment when society briefly celebrated the ability to “speak machine” before tools evolved to make that fluency less necessary. Generative AI is now returning us to the broader technological trend: lowering barriers, abstracting away complexity, and letting people focus on the real goals rather than the mechanics. As a data science professor, I’ve always told my students that the point is not to engage in hand-to-hand combat with syntax; the point is to extract insight, solve problems, and make decisions that matter. If AI can remove the drudgery and let us operate at the level of reasoning rather than rote implementation, then we are simply continuing the same trajectory that gave us assembly, high-level languages, and the GUI. In other words, AI is not the end of programming—it’s the next chapter in making computing more human.

Link: https://www.anthropic.com/research/how-ai-is-transforming-work-at-anthropic

OpenAI issues “Code Red” in response to fierce AI race

Recently, OpenAI CEO Sam Altman declared a company-wide "code red," signaling an urgent effort to retain the company's competitive lead in the rapidly evolving AI landscape. The move is a direct response to mounting pressure from major rivals, particularly Google with its Gemini models and Anthropic with its Claude offerings. These rivals are reportedly closing the performance gap with OpenAI's existing models, or even surpassing them on certain benchmarks. The "code red" directs OpenAI employees to marshal resources toward improving the core ChatGPT experience: making the chatbot faster, more reliable, and better at personalization in order to keep its substantial user base. Consequently, OpenAI is pausing or delaying other monetization and experimental projects, including its planned advertising strategy, shopping features, AI agents, and the personal assistant "Pulse." This intense focus comes as the company faces massive cash burn and trillion-dollar infrastructure commitments, so sustaining its dominant market position and high valuation is now a matter of existential importance.

That’s my take on it:

The internal "Code Red" declared by OpenAI is a justifiable response to an increasingly intense competitive environment, as the threats posed by rivals are supported by objective performance data. Current benchmarks indicate that ChatGPT's performance is demonstrably lagging in several key frontier areas:

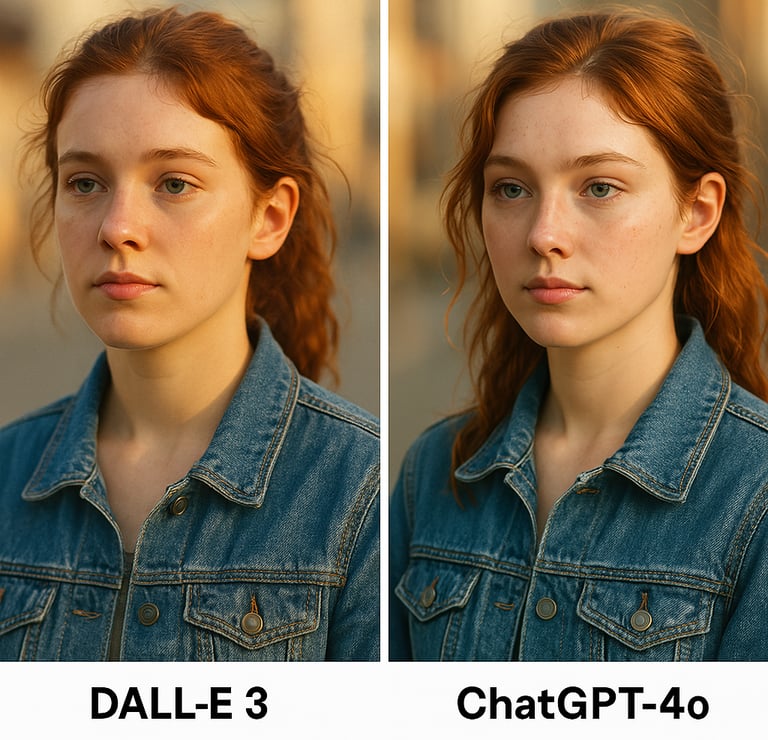

Multimodal Excellence: Google is establishing leadership in generative media. Its Nano Banana model is widely considered the leading AI image generator, with its quality prompting adoption by industry giants like Adobe and HeyGen. Further, side-by-side comparisons by technical reviewers show that Google’s video generator, Veo, consistently outperforms competitors like Sora, WAN, and Runway.

Coding Superiority: For software engineering tasks, Anthropic’s Claude Opus 4.5 claims the top spot for accuracy, with a success rate of about 80.9% on SWE-bench Verified (according to Composio). This exceeds OpenAI's specialized coding model, GPT-5.1-Codex-Max (77.9%).

Advanced Reasoning: In complex cognitive tasks, Google’s Gemini 3 Pro demonstrates a significant edge on ultra-hard reasoning tests (e.g., GPQA Diamond), with performance described as "PhD-level" on key frontier benchmarks (according to Macaron).

While rivals lead in performance benchmarks, ChatGPT still maintains a commanding lead in consumer reach. As of late 2025, ChatGPT reports over 800 million weekly active users. That puts it well ahead of Google Gemini, which has about 650 million users, although Google's figure counts monthly rather than weekly users. Anthropic’s Claude is estimated at 30 million. However, Gemini is rapidly closing this gap, and Claude remains a dominant force in high-value enterprise and developer markets.

Given this robust user base and the company's clear focus under the "Code Red," it is unlikely that ChatGPT will follow the decline of past tech leaders like Novell NetWare or WordPerfect. Instead, this intense and well-evidenced competition is expected to spur rapid innovation from OpenAI, ultimately resulting in better and more powerful tools for end-users.

Links:

https://www.cnbc.com/2025/12/02/open-ai-code-red-google-anthropic.html

https://macaron.im/blog/claude-opus-4-5-vs-chatgpt-5-1-vs-gemini-3-pro

https://composio.dev/blog/claude-4-5-opus-vs-gemini-3-pro-vs-gpt-5-codex-max-the-sota-coding-model

The US launches AI initiative called “Genesis Mission”

November 26, 2025

On November 24, 2025, President Trump signed an executive order launching the Genesis Mission, a sweeping federal effort to harness artificial intelligence for scientific research and innovation. The initiative tasks the United States Department of Energy (DOE) with building a unified “American Science and Security Platform” — combining DOE national-lab supercomputers, secure cloud environments, and federal scientific data sets, making them accessible to researchers, universities, and private-sector collaborators.

The goal is to accelerate breakthroughs across major domains such as advanced manufacturing, biotechnology, critical materials, nuclear (fission and fusion), quantum information science, and semiconductors. By enabling AI-driven modeling, simulations, automated experimentation, and large-scale data analysis, Genesis Mission aspires to shorten research timelines, strengthen national security, boost energy development, and enhance overall scientific productivity.

Officials liken the scale and ambition of the program to earlier landmark federal science mobilizations such as the Manhattan Project, describing it as a generational effort to maintain U.S. technology leadership. At the same time, some observers raise concerns. Massive AI and computing resources demand huge amounts of energy, which raises environmental and sustainability issues, especially given rising electricity use by data centers worldwide.

In short, Genesis Mission aims to centralize federal scientific data and computing power in a unified, AI-ready infrastructure. It seeks to use AI not just for narrow tasks but to systematically accelerate scientific discovery, though it comes with trade-offs around energy, governance, and security.

An article in Nature raises significant caveats and risks. One concern is how “access” will be managed. Although the plan promises broader availability, it remains unclear who will actually benefit: big labs, elite universities, or well-funded private companies. It is also unclear whether smaller institutions or independent researchers will get meaningful access.

Another worry is about oversight and governance: when the government becomes both steward of data/computing infrastructure and a participant in scientific output, issues of transparency, fairness, and potential concentration of power become more pressing.

That is my take on it:

The U.S. does seem to be adopting a more centralized, mission-driven strategy, similar to Japan’s Fifth Generation Computer Systems project in the 1980s or China’s more recent state-steered AI initiatives. But the historical analogy has limits. Japan’s fifth-generation effort was built on a speculative bet on logic programming and parallel inference machines. It ultimately failed because the chosen paradigm didn’t scale and the commercial sector moved in other directions. The present moment is different because today’s frontier technologies (AI, quantum computing, advanced cloud supercomputing) are no longer speculative. They are proven, economically entrenched, and strategically unavoidable. Modern AI systems already show transformative impact across science, national security, and industry, and quantum technology and semiconductors are recognized as critical chokepoints in global power competition. Because these technologies require staggering capital, compute infrastructure, and coordination, the private sector alone cannot build or integrate them at national scale.

In that sense, government leadership here is not about “picking winners” prematurely, as Japan did. Instead, it is about building public-goods infrastructure: shared compute, standardized data platforms, talent pipelines, and national-lab capabilities that accelerate innovation across universities and industry. The risk of misallocation still exists, since large state-led projects can drift or become politically shaped. But given the maturity and strategic clarity of these technologies, public investment today is closer to funding railroads or the Apollo program than to chasing an untested paradigm. Overall, this round of intervention looks more justified, more grounded in established trends, and more aligned with long-term scientific and geopolitical realities.

Link:

Meta will integrate Google’s TPUs into its data centers

November 26, 2025

Meta is reportedly in advanced discussions with Google about a multibillion-dollar deal that would bring Google’s Tensor Processing Units (TPUs) into Meta’s own data centers beginning around 2027. As part of the transition, Meta may first rent TPU capacity through Google Cloud next year before moving toward on-premises TPU deployment. The news immediately affected the market: Nvidia’s shares fell roughly 4% after the report surfaced, reflecting investor concern that a major AI-compute buyer might shift part of its workload away from Nvidia’s dominant GPU ecosystem. At the same time, Alphabet’s stock rose as investors anticipated the possibility of Google gaining a larger share of the AI-hardware market.

That’s my take on it:

Long-term, this development suggests that the AI-hardware landscape may be entering a more competitive and less GPU-centric era. If large hyperscalers like Meta diversify beyond Nvidia, it reduces vendor lock-in and could push the industry toward a mix of GPUs, TPUs, and other accelerators. Such diversification would also place pressure on pricing and innovation: Nvidia’s strength comes not only from hardware performance but from CUDA, the software ecosystem that has locked in years of developer expertise. For TPUs or other ASIC-based accelerators to gain broader traction, their surrounding software stacks—compilers, runtime systems, optimization libraries, developer tools—must continue to mature. If they do, Nvidia’s moat could narrow significantly. In addition, hyperscalers increasingly prefer to control more of their compute destiny, which may accelerate the trend toward custom silicon or alternative architectures.

No technology king reigns forever. Novell NetWare once dominated network operating systems until it was displaced by Windows NT. UNIX workstations and powerhouse vendors like Sun Microsystems and SGI defined high-end computing until the market shifted toward other architectures, leading to their decline. Compaq was once the best-selling PC brand before it eventually faded into acquisition and obsolescence. These precedents show that technological leadership is always contingent, vulnerable to architectural transitions, ecosystem shifts, and strategic pivots by major buyers. Nvidia remains the leader today, but the possibility that Google—or another contender—could overtake it is entirely plausible, especially if hyperscalers begin migrating workloads to alternative accelerators at scale.

Link:

https://finance.yahoo.com/news/meta-google-discuss-tpu-deal-233823637.html

Google Gemini 3 achieved highest rating on LMArena

November 19, 2025

Yesterday (Nov 18, 2025), Google announced Gemini 3, presenting it as its most capable AI model to date, with major leaps in reasoning, multimodality, and long-context performance. Gemini 3 Pro scored 1501 Elo on LMArena, which ranks models by crowdsourced head-to-head human preference votes. That score placed it at the top of the leaderboard at launch.

The model delivers substantially stronger results across advanced benchmarks—such as GPQA, MathArena, Video-MMMU, and WebDev coding tests—showing clear gains in scientific reasoning, complex mathematics, coding accuracy, and cross-modal understanding. Gemini 3 can process up to 1 million tokens, handle text, images, video, code, and handwritten materials natively, and generate deeply structured responses, explanations, visualizations, and plans. Google also introduced a “Deep Think” mode that pushes the model’s analytical depth even further for long-horizon reasoning and complex problem-solving. In practice, Gemini 3 supports three main use cases: helping users learn difficult material through multimodal comprehension, powering interactive and agentic coding workflows for developers, and enabling more reliable long-step planning with tool usage. The model is already integrated into Search’s AI mode, the Gemini app, and Google’s development platforms, marking the company’s strongest push yet toward a unified, high-performance generative AI system.
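The classic Elo expected-score formula gives some intuition for what a rating such as 1501 means. As I understand it, LMArena fits a Bradley–Terry model to its votes and reports the results on an Elo-like scale rather than running the textbook online update. Treat this as a sketch of the idea, not LMArena's method. The K-factor of 32 and the 1401-rated rival are hypothetical.

```python
def expected_score(rating_a, rating_b):
    """Classic Elo: expected share of head-to-head wins for A against B."""
    return 1.0 / (1.0 + 10.0 ** ((rating_b - rating_a) / 400.0))


def elo_update(rating, opponent, score, k=32.0):
    """Move `rating` toward the observed result (1 = win, 0.5 = tie, 0 = loss).

    k=32 is an assumed, conventional step size, not an LMArena setting.
    """
    return rating + k * (score - expected_score(rating, opponent))


if __name__ == "__main__":
    # Hypothetical comparison: a 1501-rated model vs. an imaginary 1401-rated one.
    print(f"{expected_score(1501, 1401):.3f}")  # 0.640
    print(elo_update(1500, 1500, 1.0))          # 1516.0
```

Only rating differences matter. A 100-point lead implies the higher-rated model is preferred in roughly 64% of head-to-head votes.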

That’s my take on it:

To compare Gemini 3 and ChatGPT 5.1 under similar conditions, I used the thinking mode for both models and posed the following challenging question: “The three core domains of physics—General Relativity (gravity/spacetime), Quantum Mechanics (subatomic world), and Thermodynamics (entropy/heat)—all share a deep, fundamental relationship with Information. Develop an integrated academic argument that synthesizes the concepts of Information Theory, Physics, and Philosophy to address the following question: Is Information a fundamental, irreducible property of the Universe (a "substance" or "thing-in-itself"), or is it an emergent property arising solely from the arrangements and states of physical matter/energy?”

ChatGPT took longer to generate its response, but its answer was more elaborate than Gemini’s. Gemini’s final verdict is: “The weight of modern evidence supports the conclusion that Information is the irreducible thing-in-itself. Matter, energy, and even spacetime are likely phase transitions or emergent representations of a deeper, underlying informational code. We do not live in a universe of matter that stores information; we live in a universe of information that manifests as matter.”

ChatGPT’s conclusion is: “Information is a fundamental, irreducible aspect of the universe’s ontology—but best understood as structure rather than substance. Matter/energy, spacetime, thermodynamic entropy, and conscious experience are all different faces of that same underlying informational reality.” Substantively, both models affirm the same hierarchy, namely that information is more fundamental than matter, and differ mainly in rhetorical framing. Nonetheless, a single comparison cannot establish that either model is categorically superior; a more considered verdict will require further, systematic testing.

Link: https://blog.google/products/gemini/gemini-3/#note-from-ceo

Japan’s Sakana AI becomes the country’s most valuable startup

Nov. 17, 2025

Sakana AI has officially become Japan’s most valuable unlisted startup after completing a major funding round that boosted its valuation to about 400 billion yen ($2.63 billion). The company raised roughly 20 billion yen from a mix of domestic and international backers, including MUFG, U.S. venture capital firms, Santander Group, and Shikoku Electric Power.

Sakana AI specializes in large language models tailored to Japanese language and culture, which have attracted major financial partners such as MUFG and Daiwa Securities, both of which had previously agreed to deals worth up to billions of yen for finance-focused AI systems. Looking ahead, the company plans to expand into defense and manufacturing and expects to become profitable next year. Co-founded in 2023 by former Google researchers David Ha and Llion Jones, the startup is known for its efficient, multi-model LLM architecture and a recent breakthrough that enables rapid self-improvement in its systems.

Globally, investor enthusiasm for AI remains high, with OpenAI valued around $500 billion, Anthropic at $183 billion, and France’s Mistral AI at 11.7 billion euros after its latest round. While U.S. giants pursue massive general-purpose intelligence, companies like Sakana AI and Mistral focus on specialized or regionally adapted models, aligning with the growing push for “sovereign AI” as countries seek technological autonomy amid geopolitical tensions.

In Japan, Sakana AI now surpasses Preferred Networks, which previously held the top valuation but has declined to around 160 billion yen after recent funding adjustments.

That’s my take on it:

For a long time, people have criticized mainstream LLMs for cultural bias and for being overly shaped by U.S. data, norms, and perspectives. Instead of endlessly pointing fingers at American AI companies, Japan has taken a more constructive path by developing its own domestically grounded LLM. This is a smart strategic move—one that lets Japan build models that better understand its linguistic subtleties, cultural context, and industrial needs.

However, very few countries possess the deep technical expertise, data infrastructure, and financial resources required to build their own large-scale language models. As a result, despite global interest in “sovereign AI,” the landscape will likely remain concentrated among a small group of technologically advanced nations—such as the United States, China, Japan, and France. In the end, LLM development may continue to be shaped by a handful of major players with the capacity to compete at this scale.

While most nations cannot realistically build their own LLMs, they can still play an active role in shaping how these models understand their languages and cultures. One practical pathway is collaboration: governments, research institutions, and cultural organizations can partner with major AI developers to contribute representative datasets, linguistic corpora, and culturally grounded knowledge. This approach allows countries to maintain some degree of cultural sovereignty without bearing the massive cost of full-scale model development. In many cases, co-creation with established AI companies may be the most feasible way for smaller nations to ensure that their histories, values, and perspectives are reflected accurately within global AI systems.

Meta’s chief AI scientist Yann LeCun will leave to found a new startup

November 14, 2025

Yann LeCun, a celebrated deep-learning pioneer (2018 Turing Award laureate) and longtime chief AI scientist at Meta, is reportedly preparing to leave the company in the coming months to found his own startup. According to sources cited by the Financial Times, he is already in early fundraising talks for the new venture. The startup will reportedly focus on developing “world models,” AI systems that learn to understand the physical world from video and spatial data rather than relying primarily on text-based large language models (LLMs).

This signals a divergence from the path Meta has increasingly pursued, which centers on deploying generative models and rapidly bringing AI-powered products to market. LeCun’s exit comes amid a major strategic shift at Meta. The company recently created a new AI unit, Meta Superintelligence Labs, led by Alexandr Wang (formerly CEO of Scale AI), and has invested billions of dollars in restructuring and recruiting for AI. Within this reorganization, LeCun’s long-standing research unit, FAIR (originally Facebook AI Research, now Fundamental AI Research), appears to have been somewhat deprioritized in favor of faster-paced, product-oriented work.

For Meta, losing a figure of LeCun’s stature underscores growing tensions between foundational, long-horizon AI research and the push for rapid productization in the AI arms race. The move raises questions about whether the company’s new direction may compromise longer-term research innovation. LeCun himself has publicly argued that large language models alone are insufficient for human-level reasoning, and has instead advocated architectures that incorporate physics, perception, and world modeling.

That’s my take on it:

At this stage of his career, Yann LeCun may actually benefit from stepping outside Meta’s orbit. Since his landmark work on convolutional neural networks (CNNs), which applied the backpropagation algorithm to train them, he hasn’t produced another breakthrough on that scale, while Meta’s flagship model family, Llama, continues to lag behind fast-advancing rivals like ChatGPT and Gemini. In that sense, his departure could serve both sides well: Meta can fully commit to its new product-driven AI roadmap, and LeCun can finally pursue the long-term research vision, especially world models, that never quite fit Meta’s increasingly commercial structure.

The situation echoes an earlier chapter in tech history. When Steve Jobs left Apple, it initially looked like a setback, but the distance allowed him to experiment, rebuild, and ultimately transform not only himself but the company he eventually returned to. LeCun may be entering a similar kind of creative detachment. Free from the organizational constraints, time pressures, and internal priorities of a trillion-dollar platform, he might discover the conceptual space needed for a genuine leap—perhaps the kind of architectural breakthrough he has been arguing for in world-model-based AI. Rather than a retreat, this transition could mark the beginning of his most innovative phase in years.

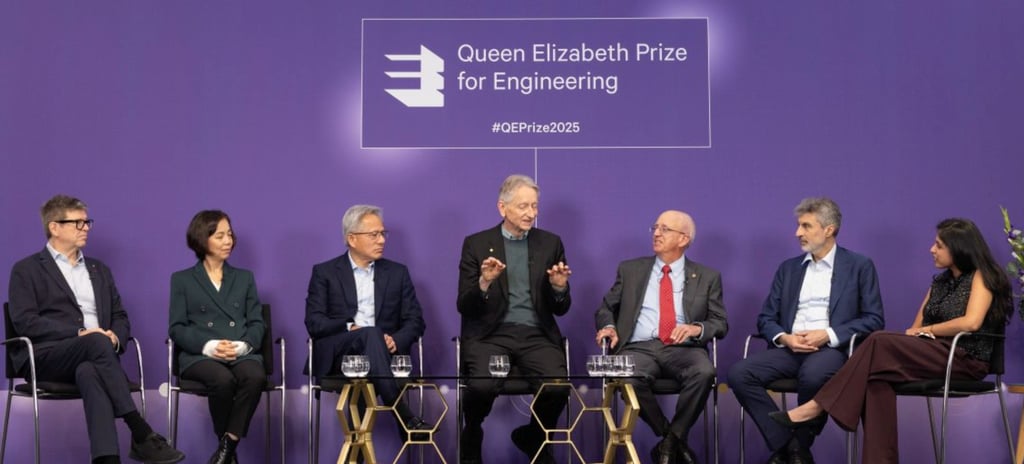

Six AI experts at the Queen Elizabeth Prize for Engineering panel discussion

Chong Ho Alex Yu

November 12, 2025

The Queen Elizabeth Prize for Engineering (QEPrize) panel, held at the Financial Times Future of AI Summit in London in early November 2025, brought together six of the world’s most prominent AI visionaries—Geoffrey Hinton, Yoshua Bengio, Yann LeCun, Fei-Fei Li, Jensen Huang, and Bill Dally—to discuss the trajectory of artificial intelligence and its societal implications. The conversation centered on whether AI will ever reach or surpass human intelligence, and what such a milestone would mean for humanity. Hinton speculated that machines capable of outperforming humans in complex reasoning and debate might emerge within two decades, while Bengio argued that progress will occur gradually in waves rather than through a single “singularity” moment. In contrast, LeCun cautioned that the field remains far from human-level cognition, particularly in domains requiring physical reasoning and common-sense understanding.

Fei-Fei Li emphasized that while AI already exceeds human perception in narrow tasks such as image recognition, it still lacks the holistic intelligence that arises from embodied experience, social awareness, and ethics. Huang reframed the debate by suggesting that asking when AI will match humans is less relevant than how humans can harness its growing capabilities for creative and productive purposes. Dally reinforced this human-centric view, stressing that AI should be designed to augment rather than replace human labor, amplifying both productivity and discovery. Together, they agreed that future breakthroughs depend not only on algorithmic innovation but also on massive compute infrastructure, efficient energy use, and responsible data management.

Beyond the technical dimension, the panel reflected a rare consensus that ethical alignment and societal adaptation must progress alongside hardware and model scaling. The speakers urged policymakers and educators to prepare for shifts in employment, governance, and creativity brought by generative and autonomous systems. Collectively, the QEPrize laureates conveyed optimism tempered by responsibility: AI, like past industrial revolutions, holds enormous promise if humanity remains intentional about guiding its evolution toward social good.

That’s my take on it:

I share the panel’s belief that AI is not a replacement for humans but an extension of our capabilities. By automating repetitive and mundane tasks, it liberates us to focus on deeper thinking, creativity, and problem-solving. Yet this view assumes an optimistic vision of human nature—one that may not always hold true. History shows that when technology eases our physical burdens, such as through vehicles and modern machines, it can also lead to unintended consequences like inactivity, obesity, and related health issues. To compensate, we invented gyms and fitness movements to rebuild what convenience had eroded. AI could exert a similar influence on our cognitive well-being: as it takes over mental labor, it may subtly invite intellectual complacency. Therefore, society might need to create its own “mental gyms,” encouraging people to periodically engage in thinking, writing, or problem-solving without AI assistance. Ultimately, echoing the panel’s sentiment, the key lies in responsible design and use—ensuring that AI strengthens rather than weakens the human spirit, guiding innovation toward the collective good.

Meta projected that 10% of its 2024 revenue would come from fraudulent ads

According to internal Meta Platforms documents reviewed by Reuters, the company projected that roughly 10% of its total 2024 revenue, about US $16 billion, would come from ads tied to scams or banned goods. The documents also reveal that Meta estimated its platforms served users about 15 billion “higher-risk” scam ads per day. Although Meta’s automated systems flagged many of these ads internally, the company banned an advertiser outright only when its systems were at least 95% certain of wrongdoing.

Advertisers flagged as likely scammers but not banned were instead charged higher ad rates—what Meta calls “penalty bids”—so the company still collected revenue while aiming to discourage the ads. The documents show Meta acknowledged that its platforms are a major vector for online fraud: one presentation estimated Meta’s services were involved in about a third of all successful U.S. scams. They also note that in an internal review, Meta concluded “It is easier to advertise scams on Meta platforms than Google.”
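The two-tier enforcement rule described above can be sketched as a simple decision function. This is a minimal illustration under stated assumptions, not Meta’s actual system: only the 95% ban threshold comes from the reported documents, while the function name `enforce`, the 50% flagging threshold, and the 2x penalty multiplier are hypothetical placeholders chosen for the example.

```python
from dataclasses import dataclass

# Reported threshold: an advertiser is banned only when the estimated
# likelihood of fraud is at least 95%.
BAN_THRESHOLD = 0.95

# Hypothetical values for illustration only; the reported documents
# do not specify these numbers.
FLAG_THRESHOLD = 0.50
PENALTY_MULTIPLIER = 2.0


@dataclass
class Decision:
    action: str  # "ban", "penalty_bid", or "allow"
    price: float  # price charged per ad slot (0.0 if banned)


def enforce(fraud_likelihood: float, base_price: float) -> Decision:
    """Hypothetical two-tier rule: ban at high certainty, surcharge flagged advertisers."""
    if not 0.0 <= fraud_likelihood <= 1.0:
        raise ValueError("fraud_likelihood must be between 0 and 1")
    if fraud_likelihood >= BAN_THRESHOLD:
        return Decision("ban", 0.0)
    if fraud_likelihood >= FLAG_THRESHOLD:
        # Flagged but below the ban bar: the ad still runs, at a higher rate.
        return Decision("penalty_bid", base_price * PENALTY_MULTIPLIER)
    return Decision("allow", base_price)


for p in (0.30, 0.80, 0.97):
    print(p, enforce(p, base_price=1.0))
```

Even with made-up numbers, the sketch makes the incentive visible: an advertiser estimated to be 80% likely to be a scammer is not removed but pays more, so the platform’s revenue from that advertiser rises rather than falls.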

Regulators are taking notice: the U.S. Securities and Exchange Commission is investigating Meta over financial-scam ads, and a UK regulator found in 2023 that Meta’s products were responsible for 54% of payments-related scam losses, more than any other social-media platform. Meta’s internal documents anticipate regulatory fines of up to US $1 billion, yet note that income from scam-linked ads dwarfs such potential penalties.

Strategically, Meta appears to have adopted a “moderate” approach to enforcement: instead of a full crackdown, it prioritized markets with higher regulatory risk and, in early 2025, capped ad-safety vetting actions so that they would not cost more than about 0.15% of total revenue.

The company aims to reduce the share of revenue from scam and illegal-goods ads from an estimated 10.1% in 2024 to 7.3% by the end of 2025, then to about 6% by 2026 and 5.8% by 2027.

In response, Meta spokesman Andy Stone said the documents present a “selective view” and that the 10.1% figure was “rough and overly-inclusive” because it included many legitimate ads. He stated that Meta has reduced user reports of scam ads by 58% globally over the past 18 months and has removed over 134 million pieces of scam-ad content so far in 2025.

That’s my take on it:

While Meta’s internal goal of lowering scam and illegal-goods ad revenue from about 10% in 2024 to 5.8% by 2027 may look like progress, the numbers are still unacceptably high for a platform of its scale and technical sophistication. With billions of daily ad impressions and some of the world’s most advanced AI tools at its disposal, Meta clearly could have done more to identify, remove, and deter fraudulent advertisers. The company’s cautious enforcement threshold—requiring roughly 95% certainty before banning an advertiser—reflects a prioritization of revenue stability over user protection. Reducing the proportion to 1–2% should be achievable if Meta were willing to recalibrate its incentives, invest more deeply in verification infrastructure, and accept short-term financial trade-offs for long-term trust.